Quick Start

Introduction

Before using the AI Employee, you need to connect to an LLM service. NocoBase currently supports mainstream online LLM services such as OpenAI, Gemini, Claude, DeepSeek, and Qwen. In addition to online LLM services, NocoBase also supports local models through Ollama.

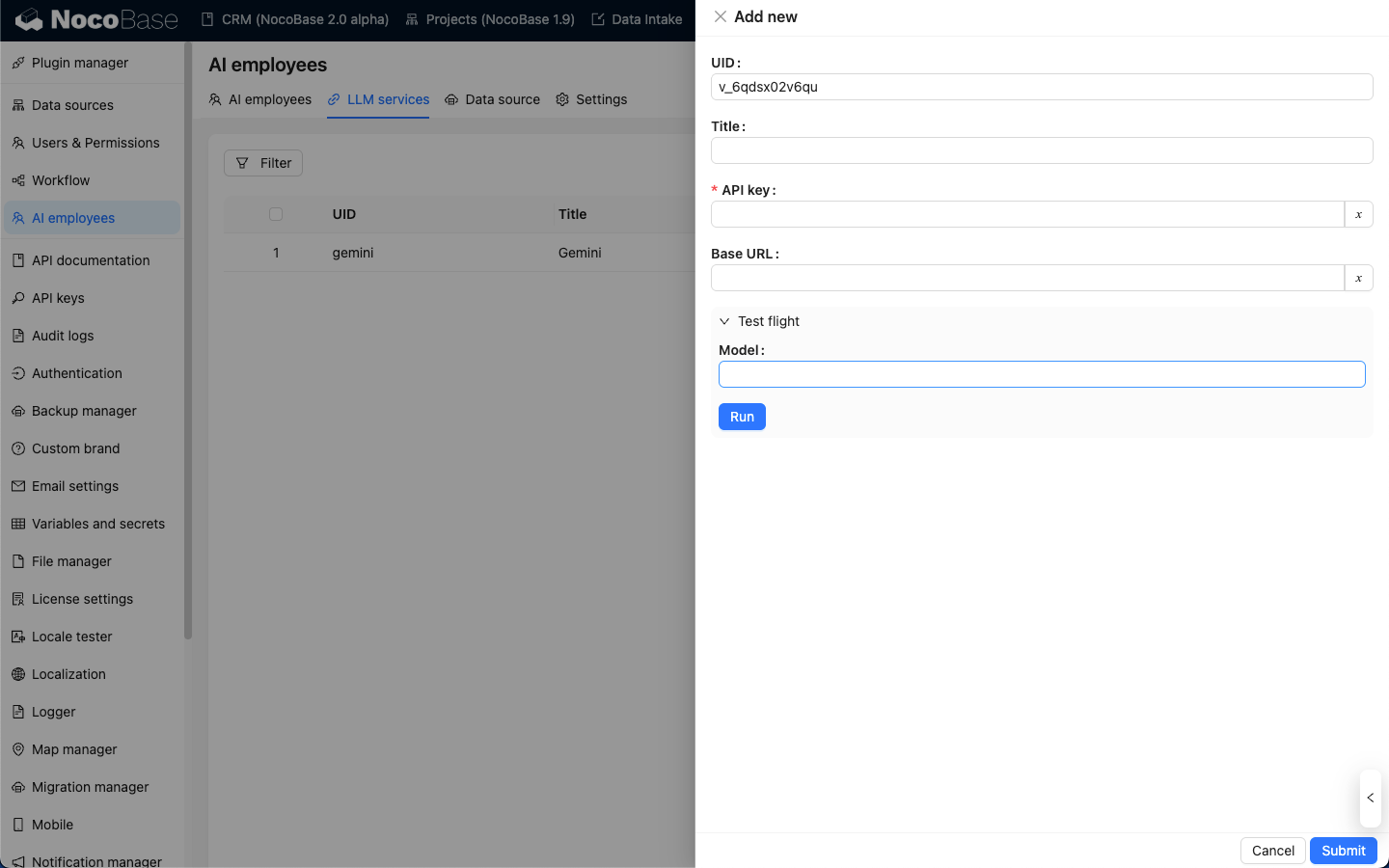

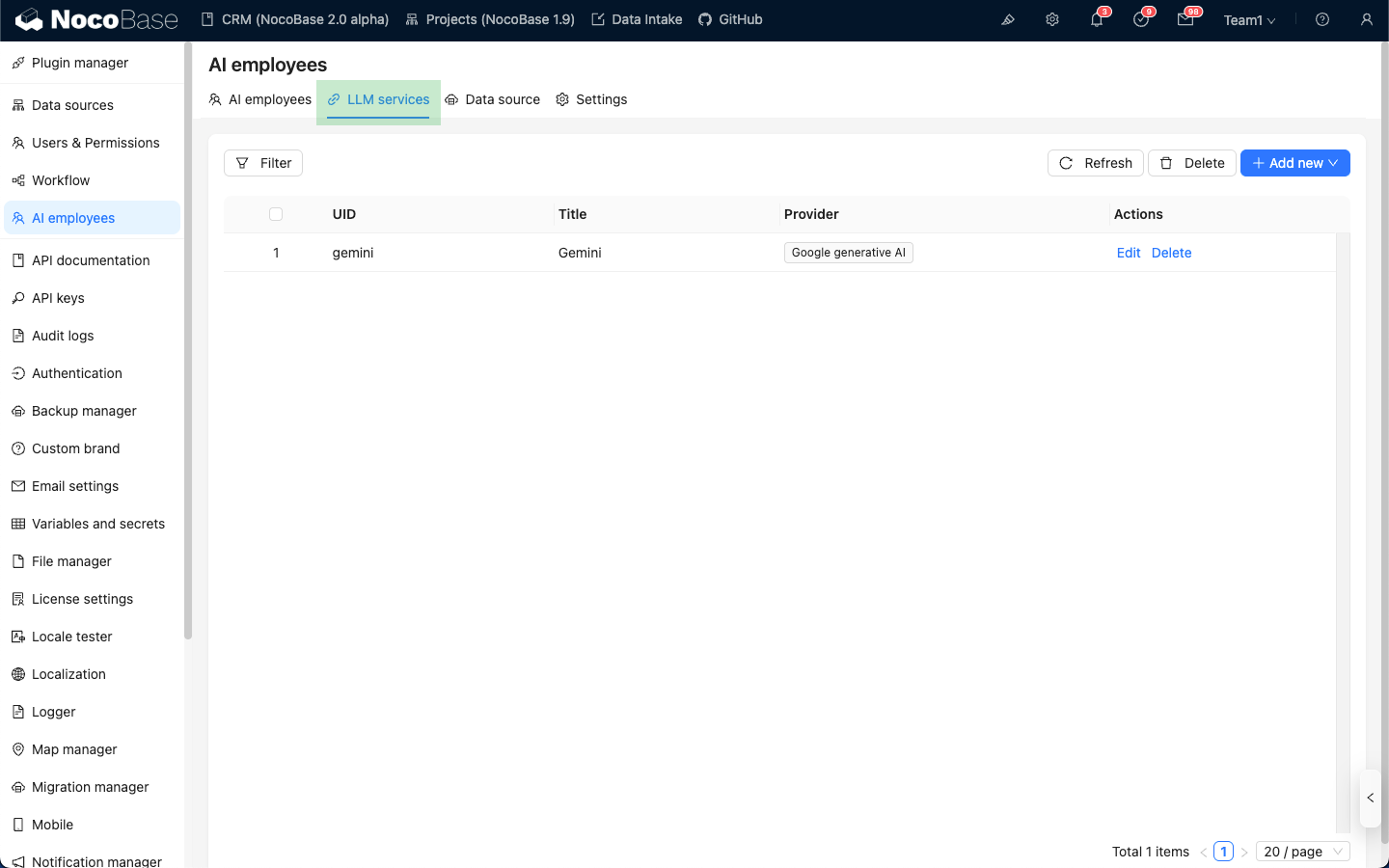

Configure LLM Service

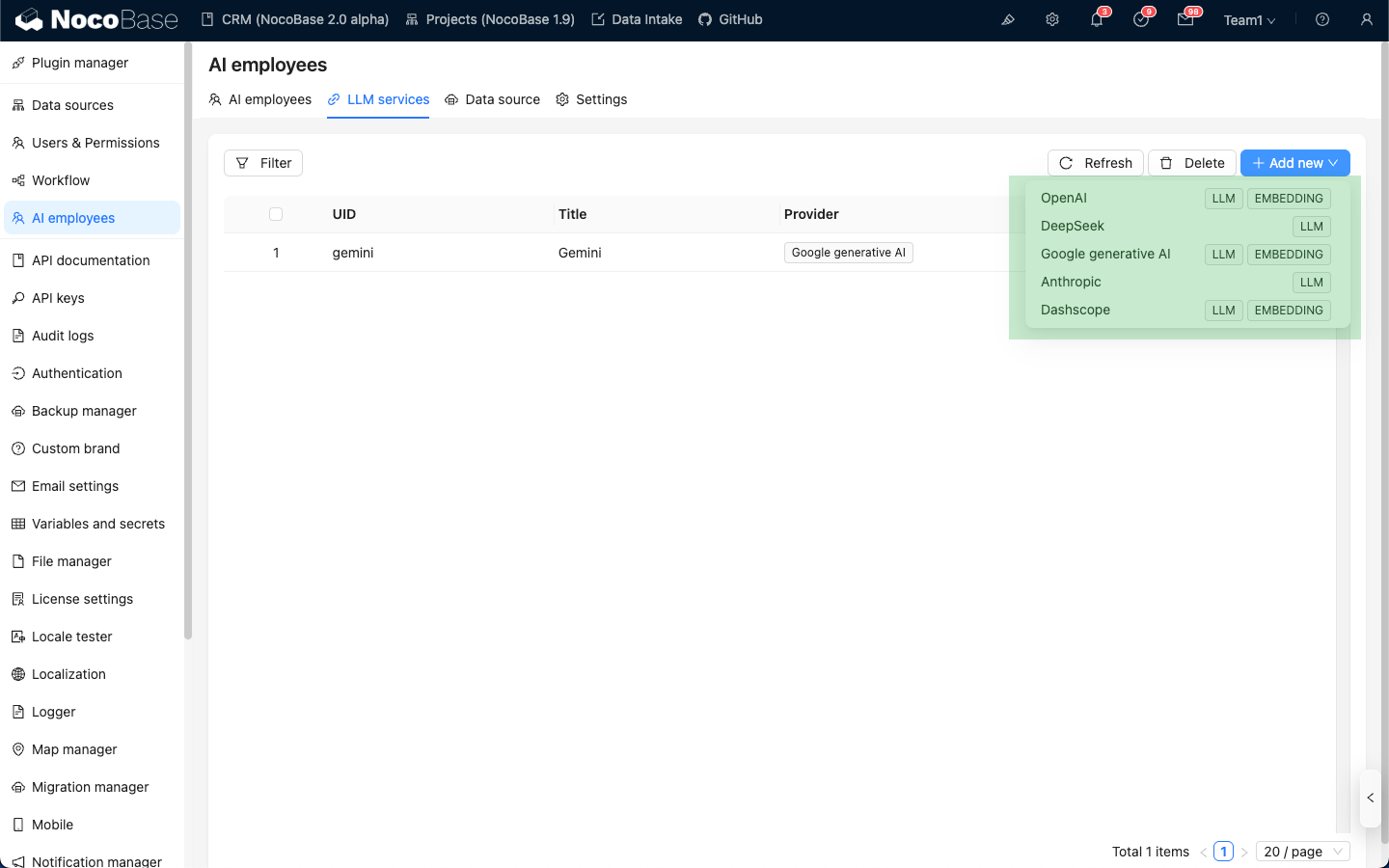

Go to the AI Employee plugin configuration page and click the LLM service tab to open the LLM service management page.

Hover over the Add New button in the upper right corner of the LLM service list and select the LLM service you want to use.

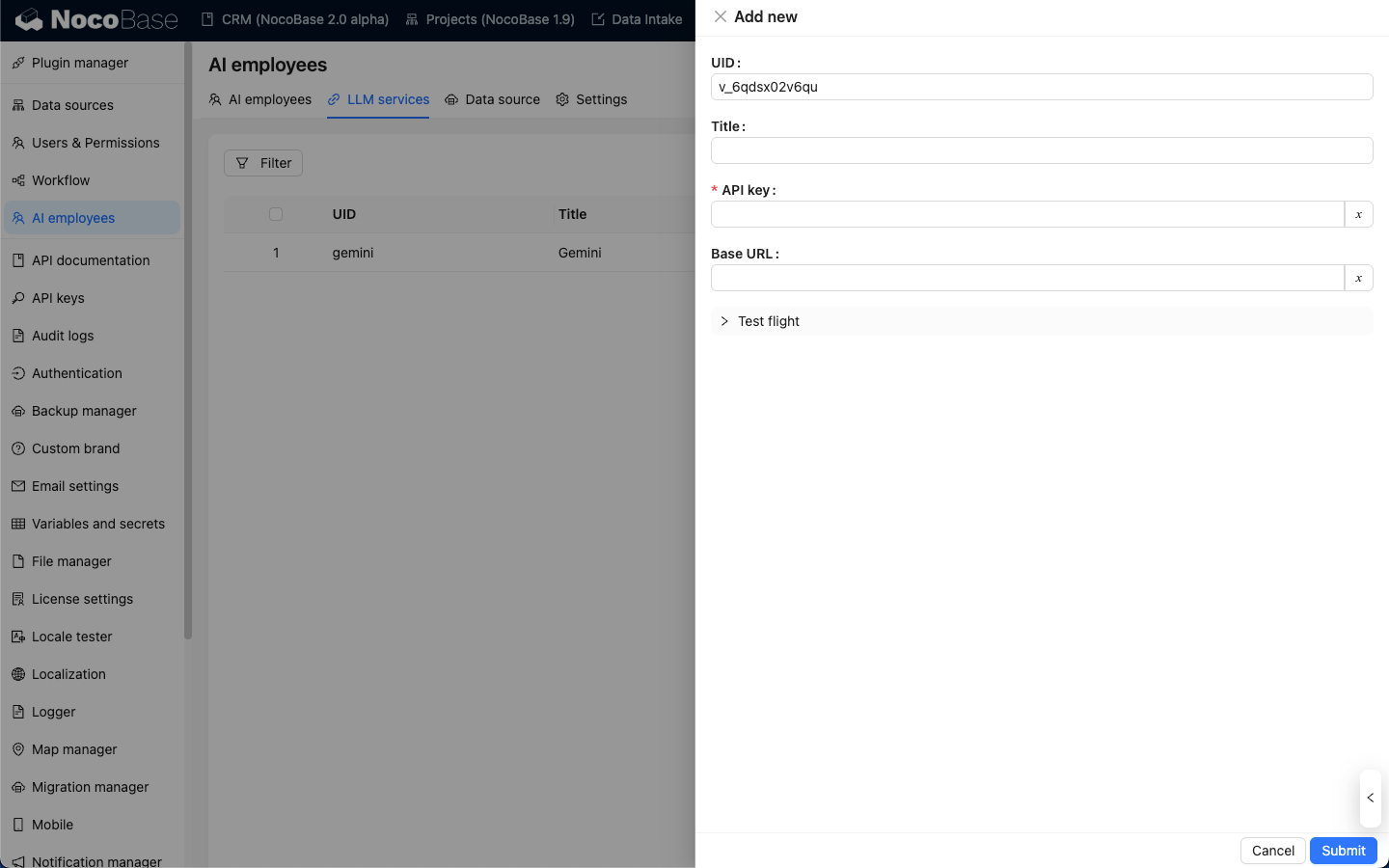

Taking OpenAI as an example: in the pop-up window, enter an easy-to-remember title and the API key obtained from OpenAI, then click Submit to save. This completes the LLM service configuration.

The Base URL can usually be left blank. If you are using a third-party LLM service that is compatible with the OpenAI API, please fill in the corresponding Base URL.
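To illustrate what the Base URL setting typically controls, here is a minimal sketch of how an OpenAI-compatible client might resolve its endpoint. This is not NocoBase's internal code. The function names `resolve_base_url` and `chat_completions_url` are hypothetical, and the fallback assumes OpenAI's public default of `https://api.openai.com/v1`.

```python
from typing import Optional

# Assumption: OpenAI's public API root, used when no Base URL is supplied.
DEFAULT_OPENAI_BASE_URL = "https://api.openai.com/v1"


def resolve_base_url(base_url: Optional[str] = None) -> str:
    """Return the base URL to use, falling back to the default when blank."""
    cleaned = (base_url or "").strip()
    if not cleaned:
        return DEFAULT_OPENAI_BASE_URL
    # Drop trailing slashes so the path can be appended without doubling them.
    return cleaned.rstrip("/")


def chat_completions_url(base_url: Optional[str] = None) -> str:
    """Build the chat completions endpoint for an OpenAI-compatible service."""
    return f"{resolve_base_url(base_url)}/chat/completions"


if __name__ == "__main__":
    # Blank Base URL: the request goes to OpenAI itself.
    print(chat_completions_url())
    # Third-party or local OpenAI-compatible service (illustrative address).
    print(chat_completions_url("http://localhost:11434/v1/"))
```

A blank value resolves to the default endpoint, while a filled-in value redirects the same request path to the compatible service.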

Availability Test

On the LLM service configuration page, click the Test flight button, enter the name of the model you want to use, and click the Run button to test whether the LLM service and model are available.
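If you want to check an API key and model name outside NocoBase, a rough equivalent of this test can be sketched as a single minimal chat request. This is an assumption about what an availability check looks like for an OpenAI-compatible service, not a description of what the Test flight button does internally. The helper names `build_test_request` and `is_available` are hypothetical.

```python
import json
import urllib.request


def build_test_request(
    api_key: str,
    model: str,
    base_url: str = "https://api.openai.com/v1",
) -> urllib.request.Request:
    """Build a minimal chat completion request for an OpenAI-compatible API."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": "ping"}],
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def is_available(
    api_key: str,
    model: str,
    base_url: str = "https://api.openai.com/v1",
    timeout: float = 10.0,
) -> bool:
    """Return True if the service answers the test request with HTTP 200."""
    request = build_test_request(api_key, model, base_url)
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status == 200
    except OSError:
        # Covers HTTP errors (bad key, unknown model), network failures, and timeouts.
        return False
```

Calling `is_available(api_key, model_name)` with your own key and model name sends one short request, so it may incur a small usage charge on paid services.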